Process Management

Process Management ¶The task_struct is a relatively large data structure, at around 1.7 kilobytes on a 32-bit machine.

Allocating the Process Descriptor ¶The task_struct structure is allocated via the slab allocator to provide object reuse and cache coloring.

Prior to the 2.6 kernel series, struct task_struct was stored at the end of the kernel stack of each process. This allowed architectures with few registers, such as x86, to calculate the location of the process descriptor via the stack pointer without using an extra register to store the location.

With the process descriptor now dynamically created via the slab allocator, a new structure, struct thread_info, was created that again lives at the bottom of the stack (for stacks that grow down) and at the top of the stack (for stacks that grow up).

    struct thread_info {
        struct task_struct *task;          /* main task structure */
        struct exec_domain *exec_domain;   /* execution domain */
        unsigned long flags;               /* low level flags */
        unsigned long status;              /* thread-synchronous flags */
        __u32 cpu;                         /* current CPU */
        __s32 preempt_count;               /* 0 => preemptable, <0 => BUG */

        mm_segment_t addr_limit;           /* thread address space:
                                              0-0xBFFFFFFF for user-thread
                                              0-0xFFFFFFFF for kernel-thread */
        struct restart_block restart_block;

        unsigned long previous_esp;        /* ESP of the previous stack in case
                                              of nested (IRQ) stacks */
        __u8 supervisor_stack[0];
    };

The maximum pid_t value is important because it is essentially the maximum number of processes that may exist concurrently on the system.

The administrator may increase the maximum value via /proc/sys/kernel/pid_max.
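As a small illustration of the /proc/sys/kernel/pid_max interface mentioned above, the following sketch reads the current limit from user space. The helper names read_long_from_file and read_pid_max are made up for this example, and the code assumes a Linux system with /proc mounted; it returns -1 otherwise.

```c
#include <stdio.h>

/* Hypothetical helper: reads one decimal integer from a file.
 * Returns -1 if the file cannot be opened or holds no number. */
long read_long_from_file(const char *path)
{
    FILE *f = fopen(path, "r");
    long value = -1;

    if (f == NULL)
        return -1;
    if (fscanf(f, "%ld", &value) != 1)
        value = -1;
    fclose(f);
    return value;
}

/* Hypothetical wrapper for the path named in the text. */
long read_pid_max(void)
{
    return read_long_from_file("/proc/sys/kernel/pid_max");
}
```

Calling read_pid_max() returns the configured limit on a Linux host. Raising the limit means writing a new value to the same file, which normally requires root privileges.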

Inside the kernel, tasks are typically referenced directly by a pointer to their task_struct structure. In fact, most kernel code that deals with processes works directly with struct task_struct. Consequently, it is very useful to be able to quickly look up the process descriptor of the currently executing task, which is done via the current macro. This macro must be separately implemented by each architecture. Some architectures save a pointer to the task_struct structure of the currently running process in a register, allowing for efficient access. Other architectures, such as x86 (which has few registers to waste), make use of the fact that struct thread_info is stored on the kernel stack to calculate the location of thread_info and subsequently the task_struct.

On x86, current is calculated by masking out the 13 least significant bits of the stack pointer to obtain the thread_info structure. This is done by the current_thread_info() function. The assembly is shown here:

    movl $-8192, %eax
    andl %esp, %eax

This assumes that the stack size is 8KB. When 4KB stacks are enabled, 4096 is used in lieu of 8192.

Finally, current dereferences the task member of thread_info to return the task_struct:

    current_thread_info()->task;

Contrast this approach with that taken by PowerPC (IBM's modern RISC-based microprocessor), which stores the current task_struct in a register. Thus, current on PPC merely returns the value stored in the register r2. PPC can take this approach because, unlike x86, it has plenty of registers. Because accessing the process descriptor is a common and important job, the PPC kernel developers deem using a register worthy for the task.
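The x86 masking trick can be imitated in user space. The sketch below is not kernel code: toy_task, toy_thread_info and the toy_* functions are hypothetical stand-ins, and the "kernel stack" is just an 8KB block from aligned_alloc. It only shows that clearing the 13 low bits of any address inside the block yields the block's base, where the thread_info stand-in lives.

```c
#include <stdint.h>
#include <stdlib.h>

#define THREAD_SIZE 8192u   /* 8KB stack area, as assumed in the text */

struct toy_task { int pid; };                        /* stand-in for task_struct */
struct toy_thread_info { struct toy_task *task; };   /* only the field used here */

/* Clear the 13 low bits of an address inside the stack area,
 * mirroring "movl $-8192, %eax; andl %esp, %eax". */
struct toy_thread_info *toy_thread_info_of(const void *sp)
{
    uintptr_t base = (uintptr_t)sp & ~((uintptr_t)THREAD_SIZE - 1);
    return (struct toy_thread_info *)base;
}

/* Mirrors current_thread_info()->task. */
struct toy_task *toy_current(const void *sp)
{
    return toy_thread_info_of(sp)->task;
}

/* Builds a fake 8KB-aligned stack area with thread_info at its base and
 * looks the task up from a pretend stack pointer near the top. */
int toy_demo(void)
{
    static struct toy_task task = { .pid = 42 };
    unsigned char *area = aligned_alloc(THREAD_SIZE, THREAD_SIZE);
    int pid;

    if (area == NULL)
        return -1;
    ((struct toy_thread_info *)area)->task = &task;
    pid = toy_current(area + THREAD_SIZE - 100)->pid;
    free(area);
    return pid;
}
```

The lookup only works because the area is aligned to its own size, which is why the kernel must allocate the stack on an 8KB (or 4KB) boundary.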

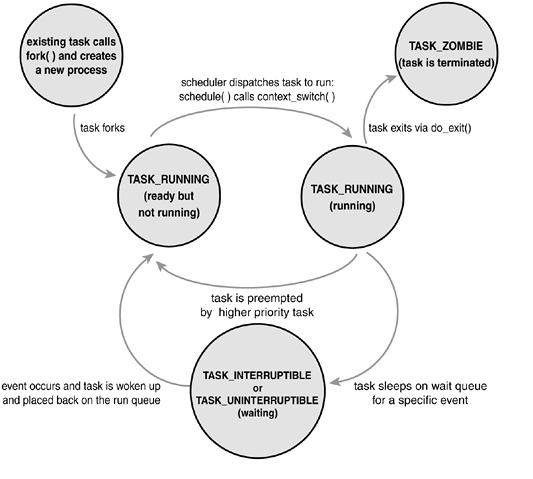

Process State ¶The state field of the process descriptor describes the current condition of the process (see Figure 3.3). Each process on the system is in exactly one of five different states. This value is represented by one of five flags:

Manipulating the Current Process State

Kernel code often needs to change a process's state. The preferred mechanism is using

    set_task_state(task, state); /* set task 'task' to state 'state' */

This function sets the given task to the given state. If applicable, it also provides a memory barrier to force ordering on other processors (this is only needed on SMP systems). Otherwise, it is equivalent to

    task->state = state;

On an SMP system, it results in the following code (shown here for x86):

    /*
     * Note: no "lock" prefix even on SMP: xchg always implies lock anyway
     * Note 2: xchg has side effect, so that attribute volatile is necessary,
     *   but generally the primitive is invalid, *ptr is output argument. --ANK
     */
    static inline unsigned long __xchg(unsigned long x, volatile void * ptr, int size)
    {
        switch (size) {
            case 1:
                __asm__ __volatile__("xchgb %b0,%1"
                    :"=q" (x)
                    :"m" (*__xg(ptr)), "0" (x)
                    :"memory");
                break;
            case 2:
                __asm__ __volatile__("xchgw %w0,%1"
                    :"=r" (x)
                    :"m" (*__xg(ptr)), "0" (x)
                    :"memory");
                break;
            case 4:
                __asm__ __volatile__("xchgl %0,%1"
                    :"=r" (x)
                    :"m" (*__xg(ptr)), "0" (x)
                    :"memory");
                break;
        }
        return x;
    }
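The idea behind set_task_state(), storing the new state with an atomic exchange that also acts as a memory barrier, can be sketched portably with C11 atomics. This is an illustration under assumptions, not the kernel's implementation: toy_proc, toy_set_task_state and the TOY_* constants are hypothetical names, and atomic_exchange is used in place of the x86 xchg instruction.

```c
#include <stdatomic.h>

/* Illustrative state values for this sketch only. */
enum {
    TOY_TASK_RUNNING = 0,
    TOY_TASK_INTERRUPTIBLE = 1,
    TOY_TASK_UNINTERRUPTIBLE = 2
};

struct toy_proc {
    atomic_long state;   /* stand-in for task->state */
};

/* Atomically stores new_state and returns the previous state.
 * atomic_exchange is sequentially consistent, so it orders the store
 * against surrounding memory accesses, much as xchg does on x86. */
long toy_set_task_state(struct toy_proc *p, long new_state)
{
    return atomic_exchange(&p->state, new_state);
}
```

A plain assignment would be enough on a uniprocessor. The exchange matters when another CPU may inspect the state right after it changes.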

Process Context ¶Normal program execution occurs in user-space. When a program executes a system call or triggers an exception, it enters kernel-space. At this point, the kernel is said to be "executing on behalf of the process" and is in process context. When in process context, the current macro is valid. Upon exiting the kernel, the process resumes execution in user-space, unless a higher-priority process has become runnable in the interim, in which case the scheduler is invoked to select the higher priority process.

Copy-on-Write ¶Traditionally, upon fork() all resources owned by the parent are duplicated and the copy is given to the child. If the new process were to immediately execute a new image, all that copying would go to waste. In Linux, fork() is implemented through the use of copy-on-write pages. Copy-on-write (or COW) is a technique to delay or altogether prevent copying of the data. Rather than duplicate the process address space, the parent and the child can share a single copy. The data, however, is marked in such a way that if it is written to, a duplicate is made and each process receives a unique copy. Consequently, the duplication of resources occurs only when they are written; until then, they are shared read-only. This technique delays the copying of each page in the address space until it is actually written to. In the case that the pages are never written (for example, if exec() is called immediately after fork()), they never need to be copied. The only overhead incurred by fork() is the duplication of the parent's page tables and the creation of a unique process descriptor for the child. In the common case that a process executes a new executable image immediately after forking, this optimization prevents the wasted copying of large amounts of data (with the address space, easily tens of megabytes). This is an important optimization because the Unix philosophy encourages quick process execution.
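The sharing itself is invisible to programs; what a program can observe is the guarantee copy-on-write must preserve: a write in the child never shows up in the parent. The following sketch demonstrates that behavior with standard POSIX calls. The function name fork_and_write and the variable shared_value are made up for the example.

```c
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

static int shared_value = 1;   /* lives in a page shared after fork() until written */

/* Forks a child that overwrites shared_value and reports the value it
 * sees through its exit status. Returns the value the parent sees
 * afterwards, or -1 on failure. */
int fork_and_write(int *child_saw)
{
    int status;
    pid_t pid = fork();

    if (pid < 0)
        return -1;
    if (pid == 0) {
        shared_value = 2;        /* the write gives the child its own copy of the page */
        _exit(shared_value);
    }
    if (waitpid(pid, &status, 0) < 0 || !WIFEXITED(status))
        return -1;
    *child_saw = WEXITSTATUS(status);
    return shared_value;         /* the parent's copy is untouched */
}
```

The child reports 2 while the parent still reads 1. A kernel that copied everything eagerly at fork() would behave the same way, so the example shows the semantics rather than the optimization.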

The Linux Implementation of Threads ¶Linux's approach to threads contrasts greatly with operating systems such as Microsoft Windows or Sun Solaris, which have explicit kernel support for threads (and sometimes call threads lightweight processes). The name "lightweight process" sums up the difference in philosophies between Linux and other systems. To these other operating systems, threads are an abstraction to provide a lighter, quicker execution unit than the heavy process. To Linux, threads are simply a manner of sharing resources between processes (which are already quite lightweight). For example, assume you have a process that consists of four threads. On systems with explicit thread support, there might exist one process descriptor that in turn points to the four different threads. The process descriptor describes the shared resources, such as an address space or open files. The threads then describe the resources they alone possess. Conversely, in Linux, there are simply four processes and thus four normal task_struct structures. The four processes are set up to share certain resources.

Threads are created like normal tasks, with the exception that the clone() system call is passed flags corresponding to specific resources to be shared:

    clone(CLONE_VM | CLONE_FS | CLONE_FILES | CLONE_SIGHAND, 0);

The previous code results in behavior identical to a normal fork(), except that the address space, filesystem resources, file descriptors, and signal handlers are shared. In other words, the new task and its parent are what are popularly called threads.

In contrast, a normal fork() can be implemented as

    clone(SIGCHLD, 0);

And vfork() is implemented as

    clone(CLONE_VFORK | CLONE_VM | SIGCHLD, 0);

Kernel Threads ¶It is often useful for the kernel to perform some operations in the background. The kernel accomplishes this via kernel threads: standard processes that exist solely in kernel-space. The significant difference between kernel threads and normal processes is that kernel threads do not have an address space (in fact, their mm pointer is NULL). They operate only in kernel-space and do not context switch into user-space. Kernel threads are, however, schedulable and preemptable as normal processes.

Removal of the Process Descriptor ¶After do_exit() completes, the process descriptor for the terminated process still exists but the process is a zombie and is unable to run. As discussed, this allows the system to obtain information about a child process after it has terminated. Consequently, the acts of cleaning up after a process and removing its process descriptor are separate. After the parent has obtained information on its terminated child, or signified to the kernel that it does not care, the child's task_struct is deallocated.

When it is time to finally deallocate the process descriptor, release_task() is invoked. It does the following:

=== Process descriptors handling ===

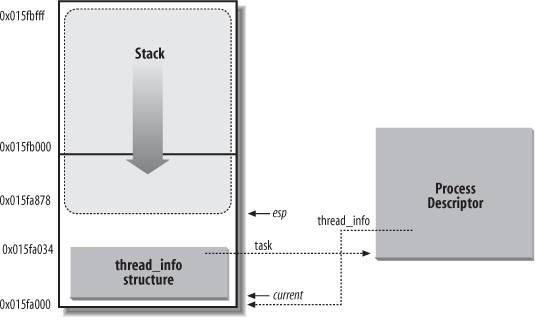

Processes are dynamic entities whose lifetimes range from a few milliseconds to months. Thus, the kernel must be able to handle many processes at the same time, and process descriptors are stored in dynamic memory rather than in the memory area permanently assigned to the kernel. For each process, Linux packs two different data structures in a single per-process memory area: a small data structure linked to the process descriptor, namely the thread_info structure, and the Kernel Mode process stack. The length of this memory area is usually 8,192 bytes (two page frames). For reasons of efficiency the kernel stores the 8-KB memory area in two consecutive page frames with the first page frame aligned to a multiple of 2^13; this may turn out to be a problem when little dynamic memory is available, because the free memory may become highly fragmented (see the section "The Buddy System Algorithm" in Chapter 8). Therefore, in the 80x86 architecture the kernel can be configured at compilation time so that the memory area including stack and thread_info structure spans a single page frame (4,096 bytes).

When the stack and thread_info structure are contained in a single page frame, the kernel uses a few additional stacks to avoid the overflows caused by deeply nested interrupts and exceptions.

The value of the esp is decreased as soon as data is written into the stack. Because the thread_info structure is 52 bytes long, the kernel stack can expand up to 8,140 bytes.

The C language allows the thread_info structure and the kernel stack of a process to be conveniently represented by means of the following union construct:

    union thread_union {
        struct thread_info thread_info;
        unsigned long stack[2048]; /* 1024 for 4KB stacks */
    };

The thread_info structure shown in Figure 3-2 is stored starting at address 0x015fa000, and the stack is stored starting at address 0x015fc000. The value of the esp register points to the current top of the stack at 0x015fa878.
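The numbers in this passage can be checked with a few lines of C. The sketch below models the union with 32-bit words so that the sizes match a 32-bit x86 kernel on any host. The toy_* names and the use of a 52-byte byte array in place of the real thread_info are assumptions made for the example.

```c
#include <stdint.h>

#define THREAD_SIZE       8192u  /* two 4KB page frames */
#define THREAD_INFO_SIZE  52u    /* size quoted in the text for 32-bit x86 */

/* Model of thread_union: both members start at the lowest address. */
union toy_thread_union {
    unsigned char thread_info[THREAD_INFO_SIZE];
    uint32_t stack[THREAD_SIZE / sizeof(uint32_t)];   /* 2048 words */
};

/* Bytes the stack can grow into before it would overwrite thread_info. */
unsigned toy_max_stack_bytes(void)
{
    return (unsigned)sizeof(union toy_thread_union) - THREAD_INFO_SIZE;
}

/* Base address of the 8KB area that contains the given esp value. */
uint32_t toy_area_base(uint32_t esp)
{
    return esp & ~(uint32_t)(THREAD_SIZE - 1);
}
```

With the addresses from the figure, toy_area_base(0x015fa878) gives 0x015fa000, the start of the thread_info structure, and toy_max_stack_bytes() gives the 8,140 bytes mentioned above.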

Identifying the current process ¶The following instructions mask out the low bits of esp to obtain the address of the thread_info structure:

    movl $0xffffe000,%ecx /* or 0xfffff000 for 4KB stacks */
    andl %esp,%ecx
    movl %ecx,p

After executing these three instructions, p contains the thread_info structure pointer of the process running on the CPU that executes the instruction.

Most often the kernel needs the address of the process descriptor rather than the address of the thread_info structure. To get the process descriptor pointer of the process currently running on a CPU, the kernel makes use of the current macro, which is essentially equivalent to current_thread_info( )->task and produces assembly language instructions like the following:

    movl $0xffffe000,%ecx /* or 0xfffff000 for 4KB stacks */
    andl %esp,%ecx
    movl (%ecx),p

Another advantage of storing the process descriptor with the stack emerges on multiprocessor systems: the correct current process for each hardware processor can be derived just by checking the stack, as shown previously. Earlier versions of Linux did not store the kernel stack and the process descriptor together. Instead, they were forced to introduce a global static variable called current to identify the process descriptor of the running process. On multiprocessor systems, it was necessary to define current as an array, with one element for each available CPU.

The process list ¶Another useful macro, called for_each_process, scans the whole process list. It is defined as:

    #define for_each_process(p) \
        for (p=&init_task; (p=list_entry((p)->tasks.next, \
                                         struct task_struct, tasks) \
                           ) != &init_task; )

Note: this macro relies on the kernel's list macros (such as list_entry), so those need to be understood before following the explanations below.
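To make for_each_process less opaque, here is a self-contained user-space sketch of the same pattern. It is a simplified imitation, not the kernel's list.h: toy_list_entry, toy_for_each_process and the other toy_* names are hypothetical, and only the pieces needed for the loop are included.

```c
#include <stddef.h>

/* Circular doubly linked list node, embedded inside each task. */
struct list_head { struct list_head *next, *prev; };

/* Recover the enclosing structure from a pointer to its embedded node. */
#define toy_list_entry(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

struct toy_task {
    int pid;
    struct list_head tasks;
};

static void toy_list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

static void toy_list_add_tail(struct list_head *node, struct list_head *head)
{
    node->prev = head->prev;
    node->next = head;
    head->prev->next = node;
    head->prev = node;
}

/* Same shape as for_each_process: start at init, follow tasks.next,
 * and stop once the walk arrives back at init. */
#define toy_for_each_process(p, init) \
    for (p = (init); \
         (p = toy_list_entry((p)->tasks.next, struct toy_task, tasks)) != (init); )

/* Builds a three-element list and sums the PIDs visited by the loop. */
int toy_demo(void)
{
    struct toy_task init_task = { .pid = 0 }, a = { .pid = 1 }, b = { .pid = 2 };
    struct toy_task *p;
    int sum = 0;

    toy_list_init(&init_task.tasks);
    toy_list_add_tail(&a.tasks, &init_task.tasks);
    toy_list_add_tail(&b.tasks, &init_task.tasks);

    toy_for_each_process(p, &init_task)
        sum += p->pid;
    return sum;
}
```

The list head is embedded in the task rather than pointing to it, so the loop has to convert each node pointer back to its task with the offset arithmetic in toy_list_entry. The head element itself is never visited by the loop body.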

How Processes Are Organized ¶The runqueue lists group all processes in a TASK_RUNNING state. When it comes to grouping processes in other states, the various states call for different types of treatment, with Linux opting for one of the choices shown in the following list.

Processes in a TASK_STOPPED, EXIT_ZOMBIE, or EXIT_DEAD state are not linked in specific lists. There is no need to group processes in any of these three states, because stopped, zombie, and dead processes are accessed only via PID or via linked lists of the child processes for a particular parent.

Processes in a TASK_INTERRUPTIBLE or TASK_UNINTERRUPTIBLE state are subdivided into many classes, each of which corresponds to a specific event. In this case, the process state does not provide enough information to retrieve the process quickly, so it is necessary to introduce additional lists of processes. These are called wait queues and are discussed next.

=== Wait queues ===

Wait queues are implemented as doubly linked lists whose elements include pointers to process descriptors. Each wait queue is identified by a wait queue head, a data structure of type wait_queue_head_t:

    struct __wait_queue_head {
        spinlock_t lock;
        struct list_head task_list;
    };
    typedef struct __wait_queue_head wait_queue_head_t;

Because wait queues are modified by interrupt handlers as well as by major kernel functions, the doubly linked lists must be protected from concurrent accesses, which could induce unpredictable results. Synchronization is achieved by the lock spin lock in the wait queue head.

Elements of a wait queue list are of type wait_queue_t:

    struct __wait_queue {
        unsigned int flags;
        struct task_struct * task;
        wait_queue_func_t func;
        struct list_head task_list;
    };
    typedef struct __wait_queue wait_queue_t;

To avoid the "thundering herd" problem, there are two kinds of sleeping processes: exclusive processes (denoted by the value 1 in the flags field of the corresponding wait queue element) are selectively woken up by the kernel, while nonexclusive processes (denoted by the value 0 in the flags field) are always woken up by the kernel when the event occurs.

A process wishing to wait for a specific condition can invoke any of the functions shown in the following list.

The sleep_on( ) function operates on the current process:

    void sleep_on(wait_queue_head_t *wq)
    {
        wait_queue_t wait;

        init_waitqueue_entry(&wait, current);
        current->state = TASK_UNINTERRUPTIBLE;
        add_wait_queue(wq,&wait); /* wq points to the wait queue head */
        schedule( );
        remove_wait_queue(wq, &wait);
    }

For instance, the wake_up macro is essentially equivalent to the following code fragment:

    void wake_up(wait_queue_head_t *q)
    {
        struct list_head *tmp;
        wait_queue_t *curr;

        list_for_each(tmp, &q->task_list) {
            curr = list_entry(tmp, wait_queue_t, task_list);
            if (curr->func(curr, TASK_INTERRUPTIBLE|TASK_UNINTERRUPTIBLE,
                           0, NULL) && curr->flags)
                break;
        }
    }

The list_for_each macro scans all items in the q->task_list doubly linked list, that is, all processes in the wait queue. For each item, the list_entry macro computes the address of the corresponding wait_queue_t variable. The func field of this variable stores the address of the wake-up function, which tries to wake up the process identified by the task field of the wait queue element. If a process has been effectively awakened (the function returned 1) and if the process is exclusive (curr->flags equal to 1), the loop terminates. Because all nonexclusive processes are always at the beginning of the doubly linked list and all exclusive processes are at the end, the function always wakes the nonexclusive processes and then wakes one exclusive process, if any exists.[1]

[1] By the way, it is rather uncommon that a wait queue includes both exclusive and nonexclusive processes.
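The wake-up policy described above (wake every nonexclusive waiter, then stop after the first exclusive waiter that is actually woken) can be modelled without any kernel machinery. In this sketch the queue is a plain singly linked list, and toy_waiter, toy_wake_function and toy_wake_up are hypothetical names. Locking and real scheduling are deliberately left out.

```c
#include <stddef.h>

struct toy_waiter {
    int exclusive;             /* mirrors flags: 1 = exclusive, 0 = nonexclusive */
    int awake;                 /* becomes 1 once the waiter has been woken */
    struct toy_waiter *next;   /* singly linked for brevity */
};

/* Stand-in for curr->func(): returns 1 only if the waiter was asleep
 * and has now been woken. */
static int toy_wake_function(struct toy_waiter *w)
{
    if (w->awake)
        return 0;
    w->awake = 1;
    return 1;
}

/* Walks the queue in order and stops after the first exclusive waiter
 * that was successfully woken. Returns the number of waiters woken. */
int toy_wake_up(struct toy_waiter *head)
{
    int woken = 0;

    for (struct toy_waiter *w = head; w != NULL; w = w->next) {
        int ok = toy_wake_function(w);

        woken += ok;
        if (ok && w->exclusive)
            break;
    }
    return woken;
}
```

With two nonexclusive waiters at the front of the queue and two exclusive waiters behind them, the first call wakes three waiters and leaves the last exclusive one asleep. A second call then wakes only that remaining waiter.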

Process Switch ¶The set of data that must be loaded into the registers before the process resumes its execution on the CPU is called the hardware context. The hardware context is a subset of the process execution context, which includes all information needed for the process execution. In Linux, a part of the hardware context of a process is stored in the process descriptor, while the remaining part is saved in the Kernel Mode stack.

Old versions of Linux took advantage of the hardware support offered by the 80x86 architecture and performed a process switch through a far jmp instruction[3] to the selector of the Task State Segment Descriptor of the next process. While executing the instruction, the CPU performs a hardware context switch by automatically saving the old hardware context and loading a new one. But Linux 2.6 uses software to perform a process switch for the following reasons:

[3] far jmp instructions modify both the cs and eip registers, while simple jmp instructions modify only eip.

Task State Segment ¶The 80x86 architecture includes a specific segment type called the Task State Segment (TSS), to store hardware contexts. Although Linux doesn't use hardware context switches, it is nonetheless forced to set up a TSS for each distinct CPU in the system. This is done for two main reasons:

The thread field ¶At every process switch, the hardware context of the process being replaced must be saved somewhere. It cannot be saved on the TSS, as in the original Intel design, because Linux uses a single TSS for each processor, instead of one for every process.

Thus, each process descriptor includes a field called thread of type thread_struct, in which the kernel saves the hardware context whenever the process is being switched out. As we'll see later, this data structure includes fields for most of the CPU registers, except the general-purpose registers such as eax, ebx, etc., which are stored in the Kernel Mode stack.

In what follows, we assume that prev points to the descriptor of the process being replaced, and next to the descriptor of the process being activated.

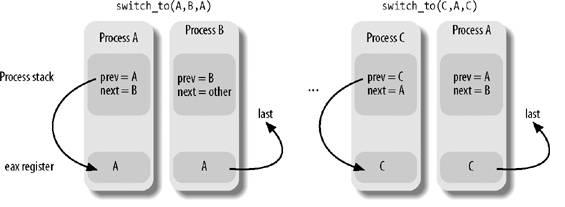

The switch_to macro ¶First of all, the macro has three parameters, called prev, next, and last. You might easily guess the role of prev and next: they are just placeholders for the local variables prev and next, that is, they are input parameters that specify the memory locations containing the descriptor address of the process being replaced and the descriptor address of the new process, respectively.

What about the third parameter, last? Well, in any process switch three processes are involved, not just two. Suppose the kernel decides to switch off process A and to activate process B. In the schedule( ) function, prev points to A's descriptor and next points to B's descriptor. As soon as the switch_to macro deactivates A, the execution flow of A freezes.

Later, when the kernel wants to reactivate A, it must switch off another process C (in general, this is different from B ) by executing another switch_to macro with prev pointing to C and next pointing to A. When A resumes its execution flow, it finds its old Kernel Mode stack, so the prev local variable points to A's descriptor and next points to B's descriptor. The scheduler, which is now executing on behalf of process A, has lost any reference to C. This reference, however, turns out to be useful to complete the process switching (see Chapter 7 for more details).

The last parameter of the switch_to macro is an output parameter that specifies a memory location in which the macro writes the descriptor address of process C (of course, this is done after A resumes its execution). Before the process switching, the macro saves in the eax CPU register the content of the variable identified by the first input parameter prev, that is, the prev local variable allocated on the Kernel Mode stack of A. After the process switching, when A has resumed its execution, the macro writes the content of the eax CPU register in the memory location of A identified by the third output parameter last. Because the CPU register doesn't change across the process switch, this memory location receives the address of C's descriptor. In the current implementation of schedule( ), the last parameter identifies the prev local variable of A, so prev is overwritten with the address of C.
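The role of last is easier to see in a toy model. The sketch below is purely illustrative and performs no real context switch: each toy_task carries fields that stand for the prev and next local variables on its own Kernel Mode stack, and a global variable stands for the eax register. All names are hypothetical.

```c
#include <stddef.h>

struct toy_task {
    const char *name;
    struct toy_task *local_prev;   /* models 'prev' on this task's kernel stack */
    struct toy_task *local_next;   /* models 'next' on this task's kernel stack */
};

static struct toy_task *toy_eax;   /* models eax, which survives the switch */

/* First half of switch_to, executed on prev's stack: the locals are
 * frozen as they are and eax is loaded with prev. */
static void toy_switch_out(struct toy_task *prev, struct toy_task *next)
{
    prev->local_prev = prev;
    prev->local_next = next;
    toy_eax = prev;
}

/* Second half, executed when 'task' resumes on its own stack: the
 * location named by 'last' (here its own local prev) receives eax. */
static void toy_resume(struct toy_task *task)
{
    task->local_prev = toy_eax;
}

/* Models one complete switch from prev to next. */
void toy_switch_to(struct toy_task *prev, struct toy_task *next)
{
    toy_switch_out(prev, next);
    toy_resume(next);
}
```

Switching A to B, then B to C, then C to A leaves A with local_prev pointing to C while local_next still points to B. That is the situation the text describes: without the write through last, A would still believe it had been preceded by itself.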


    movl prev, %eax
    movl next, %edx
    pushfl
    pushl %ebp
    movl %esp,484(%eax)

The 484(%eax) operand identifies the memory cell whose address is the contents of eax plus 484.

    movl 484(%edx), %esp
    movl $1f, 480(%eax)
    pushl 480(%edx)
    jmp __switch_to

1. Here process A that was replaced by B gets the CPU again: it executes a few instructions that restore the contents of the eflags and ebp registers. The first of these two instructions is labeled as 1:

    1:
        popl %ebp
        popfl

Notice how these pop instructions refer to the kernel stack of the prev process. They will be executed when the scheduler selects prev as the new process to be executed on the CPU, thus invoking switch_to with prev as the second parameter. Therefore, the esp register points to the prev's Kernel Mode stack.

Copies the content of the eax register (loaded in step 1 above) into the memory location identified by the third parameter last of the switch_to macro:

movl %eax, last

As discussed earlier, the eax register points to the descriptor of the process that has just been replaced.[5]

The __switch_to( ) function ¶This function call is different from the average function call, though, because __switch_to( ) takes the prev_p and next_p parameters from the eax and edx registers (where we saw they were stored), not from the stack like most functions. To force the function to go to the registers for its parameters, the kernel uses the __attribute__ and regparm keywords, which are nonstandard extensions of the C language implemented by the gcc compiler. The __switch_to( ) function is declared in the include/asm-i386/system.h header file as follows:

    __switch_to(struct task_struct *prev_p,
                struct task_struct *next_p)
        __attribute__((regparm(3)));

1. Executes the code yielded by the __unlazy_fpu( ) macro (see the section "Saving and Loading the FPU, MMX, and XMM Registers" later in this chapter) to optionally save the contents of the FPU, MMX, and XMM registers of the prev_p process.

    __unlazy_fpu(prev_p);

1. Executes the smp_processor_id( ) macro to get the index of the local CPU, namely the CPU that executes the code. The macro gets the index from the cpu field of the thread_info structure of the current process and stores it into the cpu local variable.

1. Loads next_p->thread.esp0 in the esp0 field of the TSS relative to the local CPU; as we'll see in the section "Issuing a System Call via the sysenter Instruction " in Chapter 10, any future privilege level change from User Mode to Kernel Mode raised by a sysenter assembly instruction will copy this address in the esp register:

init_tss[cpu].esp0 = next_p->thread.esp0;

1. Loads in the Global Descriptor Table of the local CPU the Thread-Local Storage (TLS) segments used by the next_p process; the three Segment Selectors are stored in the tls_array array inside the process descriptor (see the section "Segmentation in Linux" in Chapter 2).

    cpu_gdt_table[cpu][6] = next_p->thread.tls_array[0];
    cpu_gdt_table[cpu][7] = next_p->thread.tls_array[1];
    cpu_gdt_table[cpu][8] = next_p->thread.tls_array[2];

1. Stores the contents of the fs and gs segmentation registers in prev_p->thread.fs and prev_p->thread.gs, respectively; the corresponding assembly language instructions are:

    movl %fs, 40(%esi)
    movl %gs, 44(%esi)

The esi register points to the prev_p->thread structure.

1. If the fs or the gs segmentation register has been used either by the prev_p or by the next_p process (i.e., if it has a nonzero value), loads into these registers the values stored in the thread_struct descriptor of the next_p process. This step logically complements the actions performed in the previous step. The main assembly language instructions are:

    movl 40(%ebx),%fs
    movl 44(%ebx),%gs

The ebx register points to the next_p->thread structure. The code is actually more intricate, as an exception might be raised by the CPU when it detects an invalid segment register value. The code takes this possibility into account by adopting a "fix-up" approach (see the section "Dynamic Address Checking: The Fix-up Code" in Chapter 10).

1. Loads six of the dr0,..., dr7 debug registers [6] with the contents of the next_p->thread.debugreg array. This is done only if next_p was using the debug registers when it was suspended (that is, field next_p->thread.debugreg[7] is not 0). These registers need not be saved, because the prev_p->thread.debugreg array is modified only when a debugger wants to monitor prev:

[6] The 80x86 debug registers allow a process to be monitored by the hardware. Up to four breakpoint areas may be defined. Whenever a monitored process issues a linear address included in one of the breakpoint areas, an exception occurs.

    if (next_p->thread.debugreg[7]){
        loaddebug(&next_p->thread, 0);
        loaddebug(&next_p->thread, 1);
        loaddebug(&next_p->thread, 2);
        loaddebug(&next_p->thread, 3);
        /* no 4 and 5 */
        loaddebug(&next_p->thread, 6);
        loaddebug(&next_p->thread, 7);
    }

1. Updates the I/O bitmap in the TSS, if necessary. This must be done when either next_p or prev_p has its own customized I/O Permission Bitmap:

    if (prev_p->thread.io_bitmap_ptr || next_p->thread.io_bitmap_ptr)
        handle_io_bitmap(&next_p->thread, &init_tss[cpu]);

1. Terminates. The __switch_to( ) C function ends by means of the statement:

    return prev_p;

The corresponding assembly language instructions generated by the compiler are:

    movl %edi,%eax
    ret

The prev_p parameter (now in edi) is copied into eax, because by default the return value of any C function is passed in the eax register. Notice that the value of eax is thus preserved across the invocation of __switch_to( ); this is quite important, because the invoking switch_to macro assumes that eax always stores the address of the process descriptor being replaced.

The ret assembly language instruction loads the eip program counter with the return address stored on top of the stack. However, the __switch_to( ) function has been invoked simply by jumping into it. Therefore, the ret instruction finds on the stack the address of the instruction labeled as 1, which was pushed by the switch_to macro. If next_p was never suspended before because it is being executed for the first time, the function finds the starting address of the ret_from_fork( ) function (see the section "The clone( ), fork( ), and vfork( ) System Calls" later in this chapter).
